Advanced Ultrasound in Diagnosis and Therapy, 2023, Vol. 7, Issue (2): 158-171. doi: 10.37015/AUDT.2023.230028

• Review Articles •

Tairui Zhang, BSa,*, Linxue Qian, MDb

Received: 2023-04-08

Revised: 2023-04-14

Accepted: 2023-04-24

Online: 2023-06-30

Published: 2023-04-27

Contact: Tairui Zhang, BS, E-mail: TXZ057@student.bham.ac.uk

Tairui Zhang, BS, Linxue Qian, MD. ChatGPT Related Technology and Its Applications in the Medical Field. Advanced Ultrasound in Diagnosis and Therapy, 2023, 7(2): 158-171.

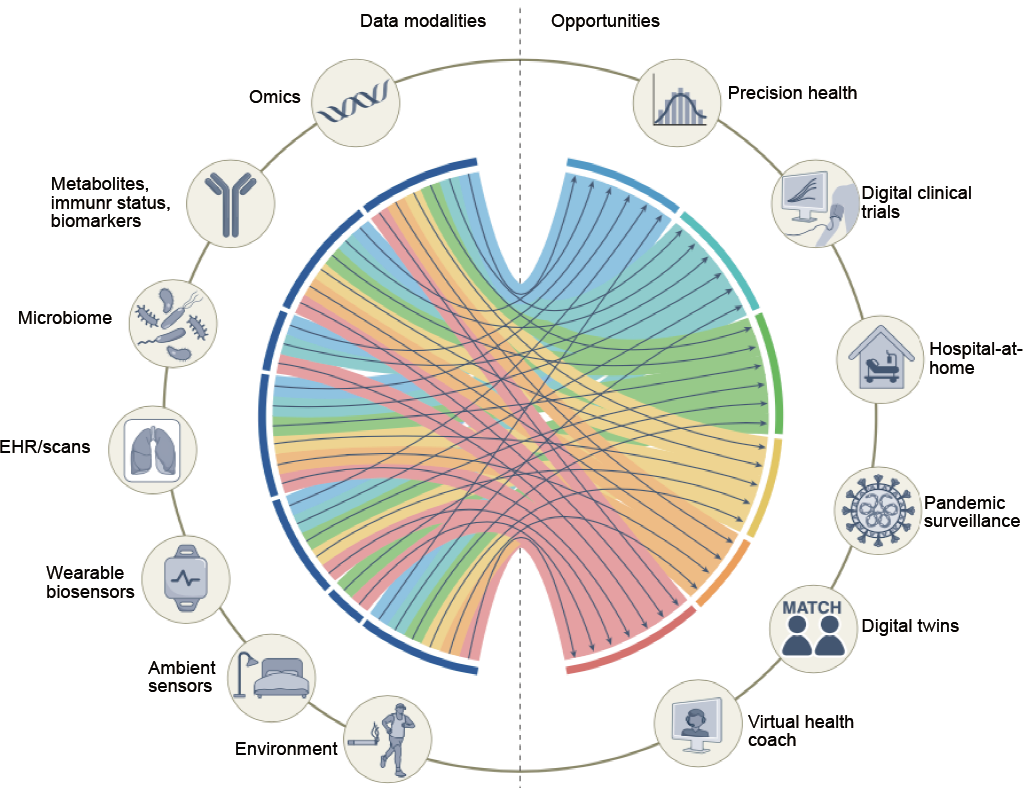

Figure 1

Multimodal data sources and opportunities in the medical field. Large-scale multimodal models should be able to handle as many of the data types that doctors encounter in clinical work as possible, and even data that some doctors cannot access, such as data on new or rare diseases. Unifying these data, including data from medical sensors, genomics, proteomics, and so on, as the input of a large-scale multimodal model allows the AI model to consider as many perspectives as possible, providing a multi-dimensional understanding of the disease, supporting more accurate recommendations or decisions, and reducing doctors’ burden in daily clinical work more effectively [4].

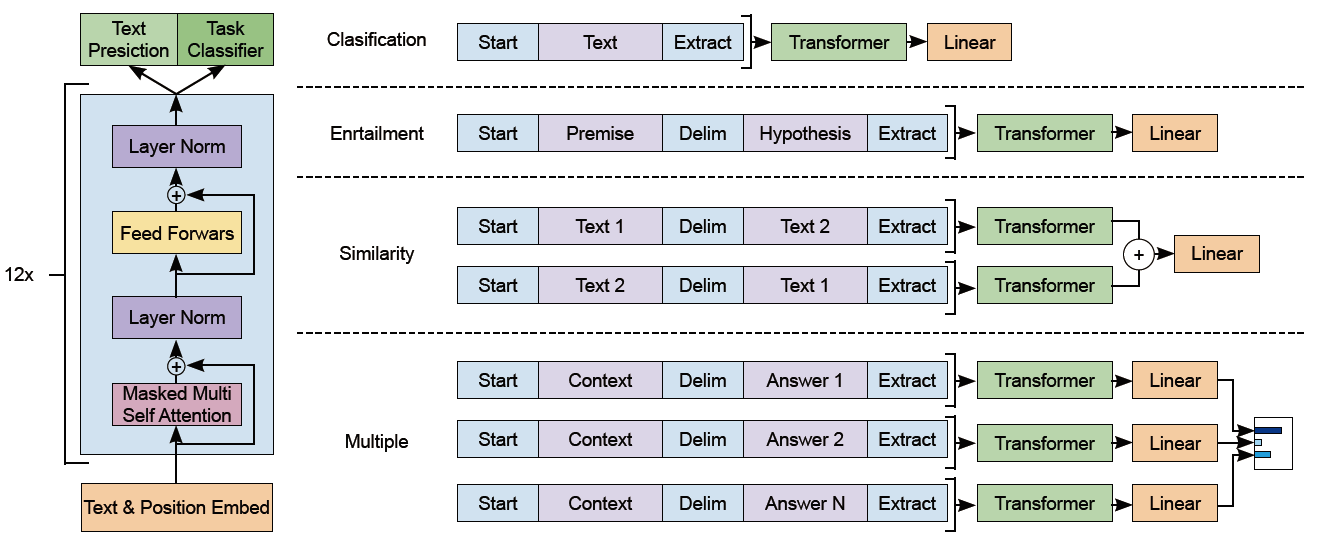

Figure 2

GPT-1 model structure. Pre-training is performed with the Transformer structure on the left; fine-tuning is then performed through linear and softmax layers adapted to the different types of downstream tasks on the right. One disadvantage of this structure is that every downstream task requires fine-tuning, which adds considerable work when using the model [13].

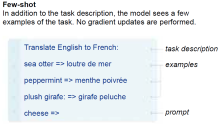

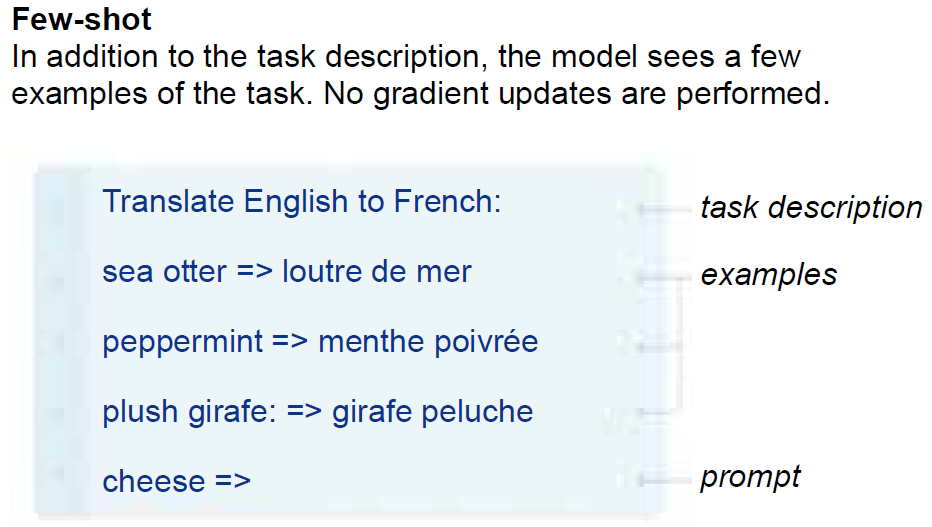

Figure 3

GPT-3 using few-shot learning for English-to-French translation. In-context learning is a concept introduced by GPT-3, which leads to the idea of prompt learning. Rather than changing parameters for each task, the model takes example inputs and outputs of the task as a prompt and generates predictions for the test inputs. By supplying questions and answers in the desired style, the user guides GPT-3 toward this “thinking style”, so that when it faces similar problems in real situations it answers along the path the user intends [16].
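The prompt layout described in this caption can be sketched in a few lines. This is only an illustration of how demonstrations and a query might be assembled into one prompt; the function name `build_few_shot_prompt` and the `=>` separator are assumptions made for this sketch, and no model is called.

```python
def build_few_shot_prompt(task, examples, query):
    """Assemble a task description, worked examples, and a final query into one prompt."""
    lines = [task]
    for source, target in examples:
        # Each demonstration pairs an input with its expected output.
        lines.append(f"{source} => {target}")
    # The query is left open so that the model completes the missing output.
    lines.append(f"{query} =>")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Translate English to French:",
    [
        ("sea otter", "loutre de mer"),
        ("peppermint", "menthe poivrée"),
        ("plush giraffe", "girafe peluche"),
    ],
    "cheese",
)
print(prompt)
```

No parameters are updated at any point: the only thing that changes between tasks is the text of the prompt.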

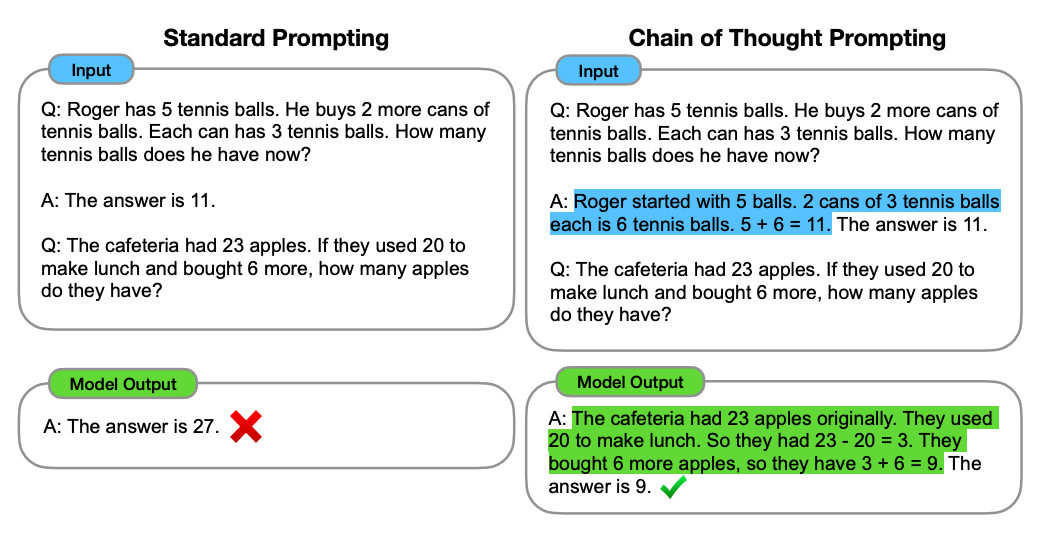

Figure 5

Using chain-of-thought prompting to guide the model to generate correct answers. Because few-shot learning is used in this case, the model imitates the reasoning shown in the similar worked example above and breaks the new, complex problem into simple, basic steps along the chain of thought. This method teaches the model to decompose complex problems logically into simple steps and tackle each in turn. Its advantage is improved generalization on tasks that require strong logic, such as solving mathematical problems [20].

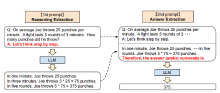

Figure 6

Two-stage prompt process demonstration. GPT-3 can produce better solutions to mathematical problems when prompted with “Let's think step by step”, showing chain-of-thought ability. This method can help the model generate more accurate results when it faces complex problems and has only a few examples available [20].
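A minimal sketch of the two-stage process follows, assuming a text-completion function is available. The `generate` argument is a hypothetical callable (prompt in, completion out), and the stub `fake_generate` only stands in for a real model so that the flow can be run; the exact answer-extraction phrase is also an assumption.

```python
def zero_shot_cot(question, generate):
    """Two-stage prompting: first elicit reasoning, then extract the final answer."""
    # Stage 1: the trigger phrase asks the model to write out its reasoning.
    reasoning_prompt = f"Q: {question}\nA: Let's think step by step."
    reasoning = generate(reasoning_prompt)
    # Stage 2: feed the reasoning back and ask only for the answer.
    answer_prompt = f"{reasoning_prompt} {reasoning}\nTherefore, the answer is"
    return generate(answer_prompt).strip()


calls = []


def fake_generate(prompt):
    """Hypothetical stand-in for a language model; returns canned text."""
    calls.append(prompt)
    if prompt.endswith("Therefore, the answer is"):
        return " 8."
    return "Half of 16 is 16 divided by 2, which is 8."


answer = zero_shot_cot("What is half of 16?", fake_generate)
print(answer)
```

The model is called twice per question, which is the cost of this approach compared with a single direct prompt.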

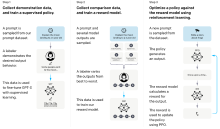

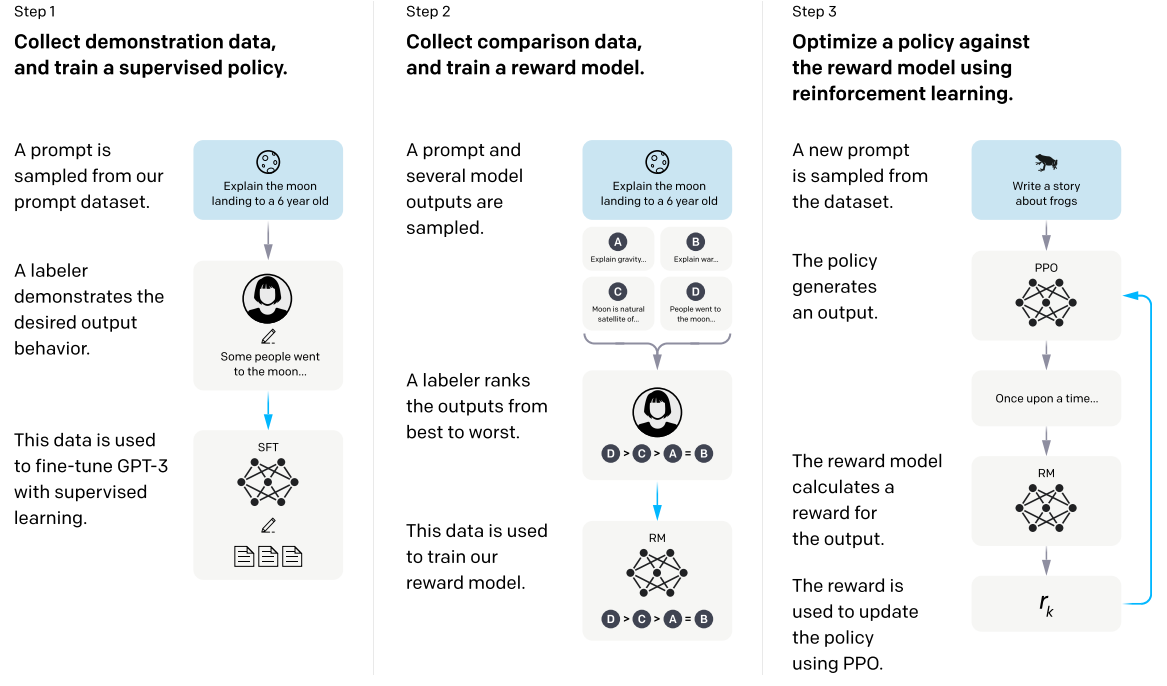

Figure 7

InstructGPT training process. The training process of InstructGPT consists of three steps [2]. A PPO (Proximal Policy Optimization) [22] policy network produces outputs, and the reward model gives feedback on them to the PPO network. These steps are repeated until the InstructGPT model aligns with human values [2].
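For orientation, the clipped surrogate objective that gives PPO [22] its name can be written for a single sample as below. This is a sketch of the generic PPO term only, not the full InstructGPT objective, which includes additional terms; the names `ratio`, `advantage`, and `epsilon` and the value 0.2 are illustrative assumptions.

```python
def ppo_clipped_objective(ratio, advantage, epsilon=0.2):
    """Per-sample PPO clipped surrogate objective (to be maximized).

    ratio     -- new-policy probability divided by old-policy probability
    advantage -- how much better the output was than expected, e.g. derived
                 from a reward model's score
    """
    # Keep the probability ratio inside [1 - epsilon, 1 + epsilon].
    clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    # Taking the minimum removes any incentive to move the policy too far.
    return min(ratio * advantage, clipped_ratio * advantage)


objective_up = ppo_clipped_objective(ratio=1.5, advantage=2.0)
objective_down = ppo_clipped_objective(ratio=0.5, advantage=-1.0)
print(objective_up, objective_down)
```

In both calls the clipped branch is selected, so a large change in the policy earns no extra credit in a single update.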

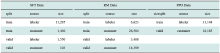

Figure 8

The volume and distribution of the data used to train InstructGPT. InstructGPT used a small amount of human-labeled data for training, yet it shows high robustness and aligns with human values, which makes this method more feasible for medical scenarios because of its lower labor cost [2].

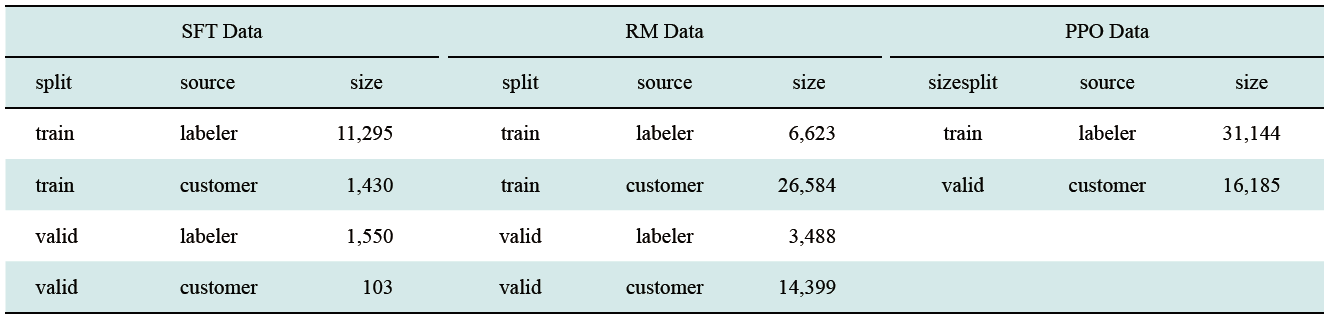

Figure 9

Comparison of pretrain-finetune, prompting, and instruction tuning. Prompting and instruction tuning differ in how they activate a language model's abilities. Prompting triggers the model's completion ability, relying on a few samples to adapt it to a downstream task. Instruction tuning, by contrast, triggers the model's understanding ability and lets it learn from multiple downstream tasks. The model can then follow more explicit user instructions without fine-tuning on a few samples, which improves its generalization across various tasks [23].

Figure 10

Schematic diagram of the pre-training and fine-tuning stages of BioGPT. First, the model splits biomedical text into words and turns each word into a word-embedding vector for the input layer. It then passes these vectors through a multi-layer unidirectional Transformer network to obtain a context-sensitive hidden representation for each word. In the pre-training stage, the model parameters are optimized with the autoregressive language-modeling objective: given a text sequence, the model predicts the next word. In the fine-tuning stage, a task-specific output layer is added for each downstream task, and the model parameters are fine-tuned with task-related data and objective functions [27].
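The autoregressive objective mentioned in this caption amounts to the average negative log-probability of each word given the words before it. The sketch below computes it for a toy sequence; the bigram table `BIGRAM` and its probabilities are invented placeholders standing in for the Transformer, and `autoregressive_nll` is a name chosen for this example.

```python
import math


def autoregressive_nll(tokens, predict_next):
    """Mean negative log-likelihood of each token given the tokens before it."""
    total = 0.0
    for t in range(1, len(tokens)):
        # The model sees only the prefix and returns a next-word distribution.
        probs = predict_next(tokens[:t])
        total += -math.log(probs[tokens[t]])
    return total / (len(tokens) - 1)


# Toy stand-in for the network: next-word probabilities keyed by the last word.
BIGRAM = {
    "aspirin": {"inhibits": 0.8, "binds": 0.2},
    "inhibits": {"COX-1": 0.5, "COX-2": 0.5},
}


def toy_model(prefix):
    return BIGRAM[prefix[-1]]


loss = autoregressive_nll(["aspirin", "inhibits", "COX-1"], toy_model)
print(round(loss, 4))
```

Pre-training lowers this quantity over a large corpus; fine-tuning would replace it with a task-specific loss on top of the same hidden representations.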

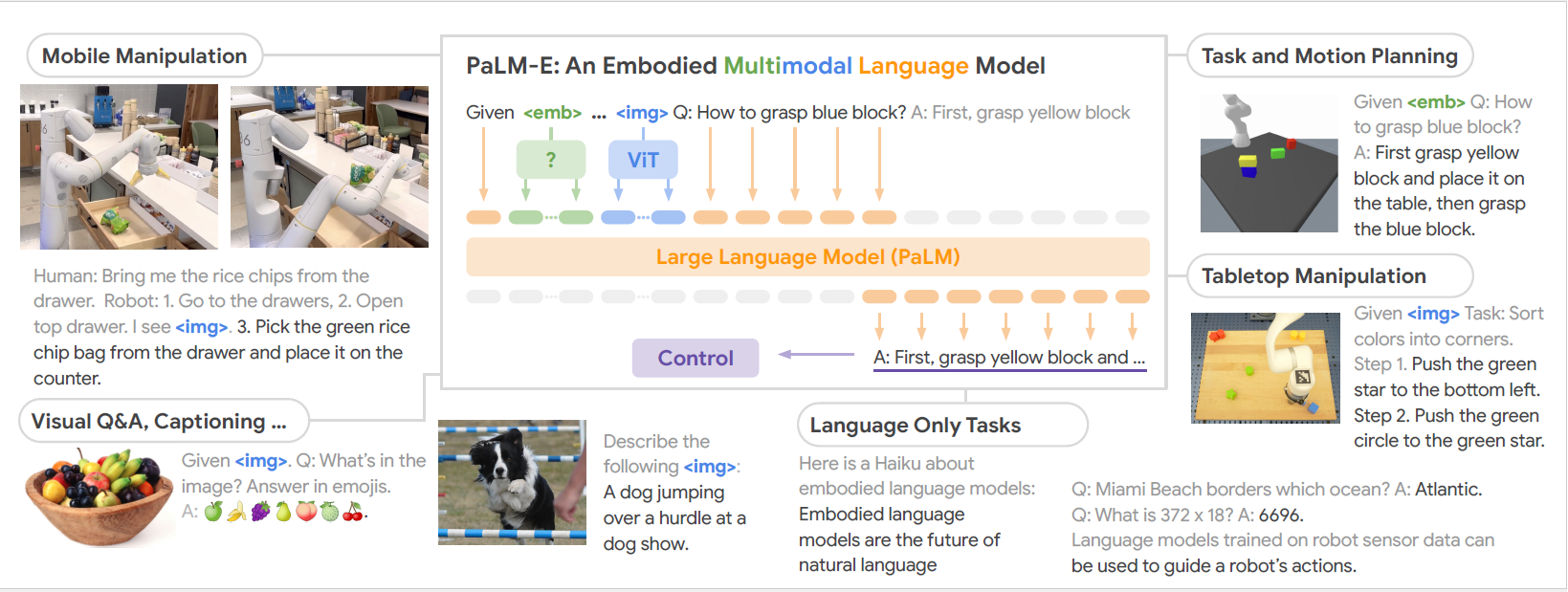

Figure 11

Schematic diagram of the working strategy of PaLM-E and its application to downstream tasks. PaLM-E can use visual data to improve its language processing skills, generate language that describes images, and give instructions that control robots in complex tasks. PaLM-E is a decoder-only LLM that produces text completions autoregressively from a given prefix or prompt. Its training data are multimodal sentences that combine visual input, continuous state estimates, text, and so on [28].

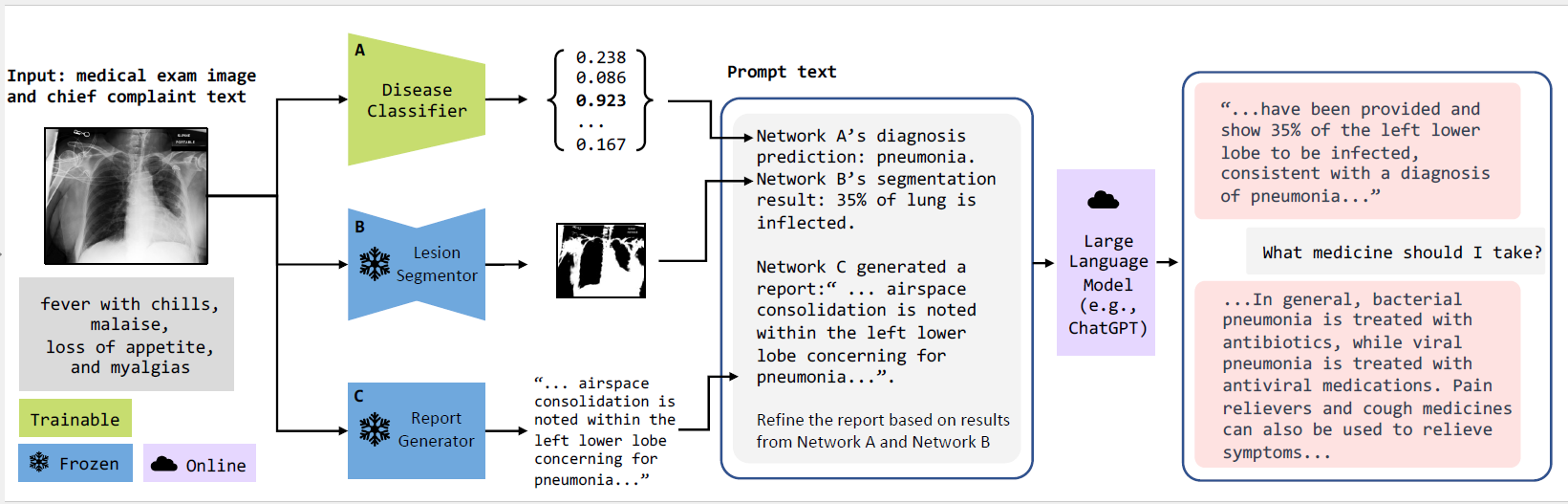

Figure 12

The strategies and main structure of ChatCAD, which combines the medical domain knowledge and logical reasoning skills of LLMs with the visual understanding of existing medical-image CAD models to give doctors an all-round understanding of symptoms and to improve the diagnostic process and therapeutic effectiveness [32].

| [1] | Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, et al. Attention is all you need. Advances in Neural Information Processing Systems 2017;5998-6008. |

| [2] | Ouyang L, Wu J, Jiang X, Almeida D, Wainwright CL, Mishkin P, et al. Training language models to follow instructions with human feedback. arXiv preprint arXiv 2022;2203.02155. |

| [3] | Bommasani R, Hudson DA, Adeli E, Altman R, Arora S, Arx SV, et al. On the opportunities and risks of foundation models. arXiv preprint arXiv 2021;2108.07258. |

| [4] | Acosta JN, Falcone GJ, Rajpurkar P, Topol EJ. Multimodal biomedical AI. Nature Medicine 2022; 28:1773-1784. doi: 10.1038/s41591-022-01981-2; pmid: 36109635. |

| [5] | Dong L, Xu S, Xu B. Speech-transformer: a no-recurrence sequence-to-sequence model for speech recognition. 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 2018;5884-5888. |

| [6] | Li N, Liu S, Liu Y, Zhao S, Liu M. Neural speech synthesis with transformer network. Proceedings of the AAAI Conference on Artificial Intelligence 2019; 33:6706-6713. |

| [7] | Vila LC, Escolano C, Fonollosa J AR, Costa-jussa MR. End-to-end speech translation with the transformer. IberSPEECH 2018;60-63. |

| [8] | Topal M O, Bas A, van Heerden I. Exploring transformers in natural language generation: Gpt, bert, and xlnet. arXiv preprint arXiv 2021;2102.08036. |

| [9] | Gao X, Qian Y, Gao A. COVID-VIT: classification of COVID-19 from CT chest images based on vision transformer models. arXiv preprint arXiv 2021;2107.01682. |

| [10] | Costa G S S, Paiva A C, Junior G B, Ferreira MM. COVID-19 automatic diagnosis with CT images using the novel transformer architecture. Anais Do XXI Simpósio Brasileiro De Computação Aplicada À Saúde 2021:293-301. |

| [11] | Zhang Z, Sun B, Zhang W. Pyramid medical transformer for medical image segmentation. arXiv preprint arXiv 2021;2104.14702. |

| [12] | Manning C D. Human language understanding & reasoning. Daedalus 2022; 151:127-138. doi: 10.1162/daed_a_01905. |

| [13] | Radford A, Narasimhan K, Salimans T, Sutskever I. Improving language understanding by generative pre-training. OpenAI Blog 2018. |

| [14] | Radford A, Wu J, Child R, Luan D, Amodei D, Sutskever I. Language models are unsupervised multitask learners. OpenAI Blog 2019. |

| [15] | Larochelle H, Erhan D, Bengio Y. Zero-data learning of new tasks. AAAI 2008; 1:646-651. |

| [16] | Brown T, Mann B, Ryder N, Subbiah M, Kaplan JD, Dhariwal P. Language models are few-shot learners. Advances in Neural Information Processing Systems 2020; 33:1877-1901. |

| [17] | Patel S B, Lam K. ChatGPT: the future of discharge summaries? The Lancet Digital Health 2023; 5:e107-e108. doi: 10.1016/S2589-7500(23)00021-3. |

| [18] | Rae JW, Borgeaud S, Cai T, Millican K, Hoffmann J, Song F, et al. Scaling language models: methods, analysis & insights from training gopher. arXiv preprint arXiv 2021;2112.11446. |

| [19] | Nye M, Andreassen AJ, Gur-Ari G, Michalewski H, Austin J, Bieber D, et al. Show your work: scratchpads for intermediate computation with language models. arXiv preprint arXiv 2021;2112.00114. |

| [20] | Kojima T, Gu SS, Reid M, Matsuo Y, Iwasawa Y. Large language models are zero-shot reasoners. arXiv preprint arXiv 2022;2205.11916. |

| [21] | Christiano P F, Leike J, Brown T, Martic M, Legg S, Amodei D. Deep reinforcement learning from human preferences. Advances in Neural Information Processing Systems 2017;4302-4310. |

| [22] | Schulman J, Wolski F, Dhariwal P, Radford A, Klimov O. Proximal policy optimization algorithms. arXiv preprint arXiv 2017;1707.06347. |

| [23] | Wei J, Bosma M, Zhao VY, Guu K, Yu AW, Lester B, et al. Finetuned language models are zero-shot learners. arXiv preprint arXiv 2021;2109.01652. |

| [24] | Zhang Z, Zhang A, Li M, Smola A. Automatic chain of thought prompting in large language models. arXiv preprint arXiv 2022;2210.03493. |

| [25] | Vemprala S, Bonatti R, Bucker A, Kapoor A. ChatGPT for robotics: design principles and model abilities. Microsoft 2023. |

| [26] | OpenAI. GPT-4 technical report. arXiv preprint arXiv 2023;2303.08774. |

| [27] | Luo RQ, Sun LA, Xia YC, Qin T, Zhang S, Poon H, et al. BioGPT: generative pre-trained transformer for biomedical text generation and mining. arXiv preprint arXiv 2023;2210.10341. |

| [28] | Driess D, Xia F, Sajjadi MSM, Lynch C, Chowdhery A, Ichter B, et al. PaLM-E: an embodied multimodal language model. arXiv preprint arXiv 2023;2303.03378. |

| [29] | Korngiebel D M, Mooney S D. Considering the possibilities and pitfalls of Generative Pre-trained Transformer 3 (GPT-3) in healthcare delivery. NPJ Digital Medicine 2021; 4:93. doi: 10.1038/s41746-021-00464-x; pmid: 34083689. |

| [30] | Kung TH, Cheatham M, Medenilla A, Sillos C, Leon LD, Elepaño C, et al. Performance of ChatGPT on USMLE: potential for AI-assisted medical education using large language models. PLOS Digital Health 2023; 2:e0000198. doi: 10.1371/journal.pdig.0000198. |

| [31] | Agbavor F, Liang H. Predicting dementia from spontaneous speech using large language models. PLOS Digital Health 2022; 1:e0000168. doi: 10.1371/journal.pdig.0000168. |

| [32] | Wang S, Zhao Z, Ouyang X, Wang Q, Shen DG. ChatCAD: interactive computer-aided diagnosis on medical image using large language models. arXiv preprint arXiv 2023;2302.07257. |



Copyright ©2018 Advanced Ultrasound in Diagnosis and Therapy

Advanced Ultrasound in Diagnosis and Therapy (AUDT) is licensed under a Creative Commons Attribution 4.0 International License.
